Prof. Shimon Ullman and research students Daniel Harari and Nimrod Dorfman of the Institute’s Computer Science and Applied Mathematics Department set out to explore the learning strategies of the young brain by designing computer models based on the way that babies observe their environment. The team first focused on hands: Within a few months, babies can distinguish a randomly viewed hand from other objects or body parts, despite the fact that hands are actually very complex – they can take on quite a range of visual shapes and move in different ways. Ullman and his team created a computer algorithm for learning hand recognition. The aim was to see if the computer could learn independently to pick hands out of video footage – even when those hands took on different shapes or were seen from different angles. That is, nothing in the program said to the computer: “Here is a hand.” Instead, it had to discover from repeated viewing of the video clips what constitutes a hand.

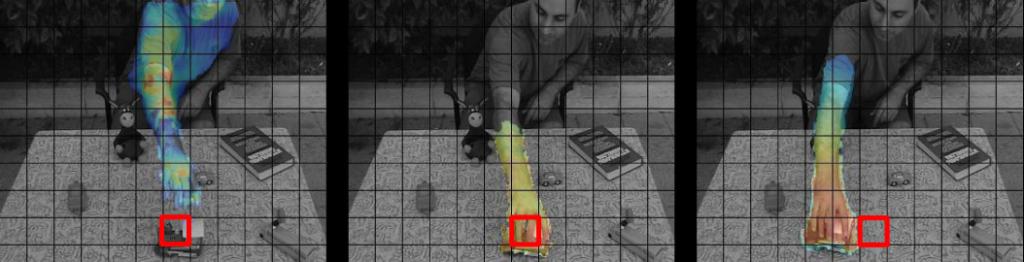

After designing and installing an algorithm for learning through detecting mover events, that is, events in which another’s hand is seen moving objects, the team showed the computer a series of videos. In some, hands were seen manipulating objects. For comparison, other videos were filmed as if from the point of view of a baby watching its own hand move, showed movement that did not involve a mover event, or showed typical visual patterns such as common body parts or people. These trials clearly showed that mover events were not only sufficient for the computer to learn to identify hands; they also far outshone all of the other methods, including that of “self-movement.”
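To make the idea of a mover event more concrete, here is a minimal toy sketch in Python. It is not the team's algorithm: it assumes, purely for illustration, that a mover event can be approximated as an image cell that is still, is then visited by motion, and is still again afterward but looks different (for example, because an object was taken away). The function name `find_mover_events`, the `threshold` parameter and the tiny one-row "video" are all hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a tiny grayscale image: rows of pixel intensities


def find_mover_events(frames: List[Frame], threshold: int = 10) -> List[Tuple[int, int, int, int]]:
    """Return (row, col, last_still_frame, first_still_frame_after) for each cell
    that was still, then crossed by motion, then still again but altered.

    Assumption for illustration only: "motion" is any intensity change above
    `threshold` between consecutive frames.
    """
    events = []
    rows, cols = len(frames[0]), len(frames[0][0])
    for r in range(rows):
        for c in range(cols):
            values = [frame[r][c] for frame in frames]
            # moving[t] is True when the cell changes between frame t and frame t + 1
            moving = [abs(values[t + 1] - values[t]) > threshold
                      for t in range(len(values) - 1)]
            t = 0
            while t < len(moving):
                if not moving[t]:
                    t += 1
                    continue
                start = t  # frame `start` is the last still frame before the motion
                while t < len(moving) and moving[t]:
                    t += 1
                end = t  # frame `end` is the first frame after the motion episode
                still_before = start >= 1
                still_after = end < len(moving)
                altered = abs(values[end] - values[start]) > threshold
                if still_before and still_after and altered:
                    events.append((r, c, start, end))
    return events


# Toy video: background = 0, object = 100 (column 3), hand = 200.
# The hand enters from the left, reaches the object, and carries it away.
frames = [
    [[0, 0, 0, 100, 0]],
    [[200, 0, 0, 100, 0]],
    [[0, 200, 0, 100, 0]],
    [[0, 0, 200, 100, 0]],
    [[0, 0, 0, 200, 0]],   # hand covers the object
    [[0, 0, 200, 0, 0]],   # hand retreats; the object is gone
    [[0, 200, 0, 0, 0]],
    [[200, 0, 0, 0, 0]],
    [[0, 0, 0, 0, 0]],
    [[0, 0, 0, 0, 0]],
]
events = find_mover_events(frames)
print(events)
```

In this toy clip only the cell that held the object is flagged; the cells the hand merely passed over return to their earlier appearance and are ignored. A real system would also have to follow the moving region that caused the change in order to collect images of the hand itself, which this sketch does not attempt.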

But the model was not yet complete. With mover events alone, the computer could learn to detect hands but still had trouble with different poses. Again, the researchers went back to insights into early perception: Infants can not only detect motion but also track it, and they are very interested in faces. Adding mechanisms for observing the movements of already detected hands to learn new poses, and for using the face and body as reference points to locate hands, improved the learning process.

In the next part of their study, the researchers looked at another, related concept that babies learn early on but computers have trouble grasping – knowing where another person is looking. Here, the scientists took the insights they had already gained – mover events are crucial and babies are interested in faces – and added a third: People look in the direction of their hands when they first grasp an object. On the basis of these elements, the researchers created another algorithm to test the idea that babies first learn to identify the direction of a gaze by connecting faces to mover events. Indeed, the computer learned to follow the direction of even a subtle glance – for instance, the eyes alone turning toward an object – nearly as well as an adult human.
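The idea of connecting faces to mover events can be illustrated with a small Python sketch. It is only a rough stand-in for the researchers' method: it assumes that each mover event supplies a training pair made of a face descriptor and the direction from the face to the event, and it swaps in a simple nearest-neighbor lookup for prediction. The class `GazeLearner`, the two-number face descriptors and the image coordinates are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates; y grows downward


def direction(from_pt: Point, to_pt: Point) -> float:
    """Angle in degrees of the vector from one image point to another."""
    return math.degrees(math.atan2(to_pt[1] - from_pt[1], to_pt[0] - from_pt[0]))


class GazeLearner:
    """Toy learner: stores (face descriptor, gaze angle) pairs from mover events."""

    def __init__(self) -> None:
        self.examples: List[Tuple[Tuple[float, ...], float]] = []

    def observe(self, face_descriptor: Sequence[float], face_pos: Point, event_pos: Point) -> None:
        # Assumption: at the moment of a mover event, the person is looking at it,
        # so the face-to-event direction serves as a free gaze label.
        self.examples.append((tuple(face_descriptor), direction(face_pos, event_pos)))

    def predict(self, face_descriptor: Sequence[float]) -> float:
        # Return the gaze angle of the most similar face seen so far.
        nearest = min(self.examples, key=lambda ex: math.dist(ex[0], face_descriptor))
        return nearest[1]


learner = GazeLearner()
# Hypothetical descriptors: made-up numbers standing in for face appearance.
learner.observe([0.9, 0.1], face_pos=(50, 50), event_pos=(90, 50))  # event to the right
learner.observe([0.1, 0.9], face_pos=(50, 50), event_pos=(10, 50))  # event to the left
learner.observe([0.5, 0.5], face_pos=(50, 50), event_pos=(50, 90))  # event below

right_guess = learner.predict([0.8, 0.2])
left_guess = learner.predict([0.2, 0.8])
print(right_guess, left_guess)
```

The point of the sketch is that no one labels gaze direction by hand: the mover events themselves provide the teaching signal, and new faces are then interpreted by their similarity to faces seen during those events.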

The researchers believe these models show that babies are born with certain pre-wired patterns – such as a preference for certain types of movement or visual cues. They refer to this type of understanding as proto-concepts – the building blocks with which one can begin to build an understanding of the world. Thus the basic proto-concept of a mover event can evolve into the concept of hands and of direction of gaze, and eventually give rise to even more complex ideas such as distance and depth.

This study is part of a larger endeavor known as the Digital Baby Project. The idea, says Harari, is to create models for very early cognitive processes. “On the one hand,” says Dorfman, “such theories could shed light on our understanding of human cognitive development. On the other hand, they should advance our insights into computer vision (and possibly machine learning and robotics).” These theories can then be tested in experiments with infants, as well as in computer systems.

Prof. Ullman is the incumbent of the Ruth and Samy Cohn Professorial Chair of Computer Sciences.
